Track, measure, improve

Most sports and esports organizations are focused on winning. But sustainable winning can only be achieved through continuous improvement and iteration. In other words, through constant growth.

So esports teams, like any person, team or company whose success depends on performance, are growth focused. Everything they do is aimed at growing as fast as possible. Growth in this context doesn’t mean increasing size or volume (for example, hiring more people). It means increasing knowledge and the ability to execute on that knowledge.

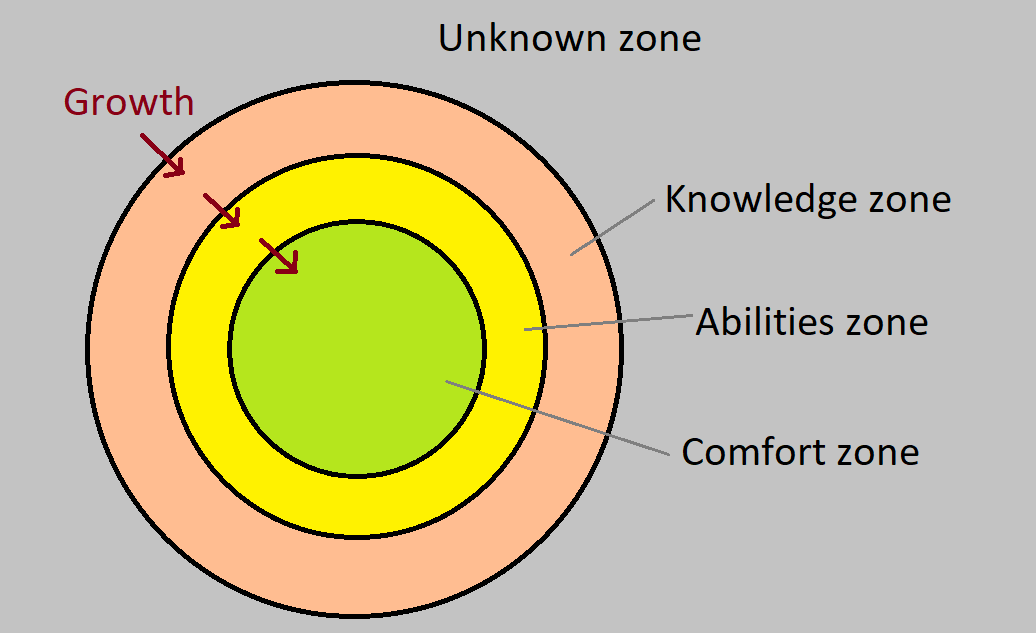

The process that takes us from increasing our knowledge to performing sustainably at the peak of our abilities is what I call UKAC:

- Unknown zone – What you don’t know

- Knowledge zone – What you are aware of but don’t know how to execute

- Abilities zone – All you know how to do (your performance ceiling)

- Comfort zone – What you can do reliably

So being growth focused means navigating the UKAC. But that’s not enough, because everyone is already doing this (or at least trying to). You have to do it fast; in fact, as fast as possible.

The person, team or company that learns to do this faster will have a competitive advantage in the short term, and will inevitably end up being the best.

If You Can’t Measure It, You Can’t Improve It.

Apart from being growth focused, esports orgs are also data monsters. By that I mean they generate an insane amount of data that is easy to capture and process. We train and compete on computers, and computers are much better than humans at processing data.

The title of this section is a quote by Peter Drucker, a management consultant, educator, and author. It fits the topic perfectly, but I’d tweak it a bit. I’d say:

“If you can’t track it, you can’t measure it and you can’t improve it.”

I feel that tracking is one of the biggest issues for esports orgs right now. They are aware that there’s a ton of useful data “floating” around, but there’s so much of it that it’s confusing.

Most orgs don’t know which data is relevant, or how to capture and process it. The effort ends up being massively time consuming, and the data ends up being useless.

The orgs that are able to go through the following loop faster will become the future powerhouses.

- Capture the correct info

- Process it

- Reach conclusions and create hypothesis

- Design a test

- Execute the test

- Repeat

This data is generated every second, in massive volumes. It contains the insights we need to accelerate growth.

The org that figures this out (and invests heavily in it) will end up with a massive advantage over the rest.

Hello everyone,

I’m so happy a friend of mine showed me this article.

The process you describe here is the cornerstone of how I coach players. I’m glad to see that it also applies to companies, since it surfaces data that is much more meaningful.

Too often, players assess their performance with information that doesn’t mean anything. These metrics are called “vanity metrics”:

– Games won / games lost ratio

– Did I get more kills than my teammates?

– Did I die less?

– …

This kind of data is completely useless if you want to become a better player, just as it would be for an esports company to rely mainly on the “how many tournaments did we win?” metric. It doesn’t tell you what you need to improve in order to perform better, and it doesn’t let you take action to get better, because such a metric gives you no insight into the situation.

I love the third step: “Reach conclusions and create hypothesis”. This step teaches you so much about what is working and what is not. It also helps you decide what to measure, that is, to define what are called “actionable metrics”: measurements that actually mean something and improve your knowledge, so that you can enter the “abilities zone” in the UKAC graph.

Thank you for this amazing article, have a good day!

I’m not a big fan of this measurement movement, because in business people often measure bullshit and make decisions based on it. Because it MUST be measurable!

On the other hand, only facts that can easily be measured are taken into account in decisions, while a lot of (unmeasurable) opportunities are left out. That’s how opportunities get lost.

An important step is missing between step 5 (“Execute the test”) and step 6 (“Repeat”): validate the test results. Did the test deliver what we wanted to learn? If yes, what did we learn? If no, how can we test the hypothesis in another way?